the following changes have been pushed to bugzilla.mozilla.org:

discuss these changes on mozilla.tools.bmo.

Hello Mozillians,

We are happy to announce that on Friday, February 5th, we are organizing the Firefox 45.0 Beta 3 Testday. We will be focusing our testing on the following features: Search Refactoring, Synced Tabs Menu, Text to Speech and Grouped Tabs Migration. Check out the detailed instructions via this etherpad.

No previous testing experience is required, so feel free to join us on the #qa IRC channel, where our moderators will offer you guidance and answer your questions.

Join us and help us make Firefox better! See you on Friday!


This article has been coauthored by Aislinn Grigas, Senior Interaction Designer, Firefox Desktop

Cross posting with Mozilla’s Security Blog

November 3, 2015

Over the past few months, Mozilla has been improving the user experience of our privacy and security features in Firefox. One specific initiative has focused on the feedback shown in our address bar around a site’s security. The major changes are highlighted below along with the rationale behind each change.

Color and iconography are commonly used today to communicate to users when a site is secure. The most widely used patterns are coloring a lock icon and parts of the address bar green. This treatment has a straightforward rationale, given that green means good in most cultures. Firefox has historically used two different color treatments for the lock icon – a gray lock for Domain-validated (DV) certificates and a green lock for Extended Validation (EV) certificates. The average user is unlikely to understand this color distinction between EV and DV certificates. The overarching message we want users to take from both certificate states is that their connection to the site is secure. We’re therefore updating the color of the lock when a DV certificate is used to match that of an EV certificate.

Although the same green icon will be used, the UI for a site using EV certificates will continue to differ from a site using a DV certificate. Specifically, EV certificates are used when Certificate Authorities (CA) verify the owner of a domain. Hence, we will continue to include the organization name verified by the CA in the address bar.

A second change we’re introducing addresses what happens when a page served over a secure connection contains Mixed Content. Firefox’s Mixed Content Blocker proactively blocks Mixed Active Content by default. Users historically saw a shield icon when Mixed Active Content was blocked and were given the option to disable the protection.

Since the Mixed Content state is closely tied to site security, the information should be communicated in one place instead of having two separate icons. Moreover, we have seen that the number of times users override mixed content protection is slim, and hence the need for dedicated mixed content iconography is diminishing. Firefox is also using the shield icon for another feature in Private Browsing Mode and we want to avoid making the iconography ambiguous.

The updated design that ships with Firefox 42 combines the lock icon with a warning sign which represents Mixed Content. When Firefox blocks Mixed Active Content, we retain the green lock since the HTTP content is blocked and hence the site remains secure.

For users who want to learn more about a site’s security state, we have added an informational panel to further explain differences in page security. This panel appears anytime a user clicks on the lock icon in the address bar.

Previously users could click on the shield icon in the rare case they needed to override mixed content protection. With this new UI, users can still do this by clicking the arrow icon to expose more information about the site security, along with a disable protection button.

There is a second category of Mixed Content called Mixed Passive Content. Firefox does not block Mixed Passive Content by default. However, when it is loaded on an HTTPS page, we let the user know with iconography and text. In previous versions of Firefox, we used a gray warning sign to reflect this case.

We have updated this iconography in Firefox 42 to a gray lock with a yellow warning sign. We degrade the lock from green to gray to emphasize that the site is no longer completely secure. In addition, we use a vibrant color for the warning icon to amplify that there is something wrong with the security state of the page.

We also use this iconography when the certificate or TLS connection used by the website relies on deprecated cryptographic algorithms.

The above changes will be rolled out in Firefox 42. Overall, the design improvements make it simpler for our users to understand whether or not their interactions with a site are secure.

We have made similar changes to the site security indicators in Firefox for Android, which you can learn more about here.

Firefox for Windows, Mac and Linux now lets you choose to receive push notifications from websites if you give them permission. This is similar to Web notifications, except now you can receive notifications for websites even when they’re not loaded in a tab. This is super useful for websites like email, weather, social networks and shopping, which you might check frequently for updates.

You can manage your notifications in the Control Center by clicking the icon on the left side of the address bar.

Push Notifications for Web Developers

To make this functionality possible, Mozilla helped establish the Web Push W3C standard that’s gaining momentum across the Web. We also continue to explore the new design pattern known as Progressive Web Apps. If you’re a developer who wants to implement push notifications on your site, you can learn more in this Hacks blog post.


This is a quick write-up summarizing my and Jeff’s experience using RR to debug a fairly rare intermittent reftest failure. There’s still a lot to be learned about how to use RR effectively, so I’m hoping that sharing this will help others.

First, given an offending pixel, I was able to set a breakpoint on it using these instructions. Next, using rr-dataflow, I was able to step from the offending pixel to the display item responsible for it. Let me emphasize this for a second, since it’s incredibly impressive. rr + rr-dataflow allows you to go from a buffer, through an intermediate surface, to the compositor on another thread, through another intermediate surface, back to the main thread and eventually back to the relevant display item. All of this was automated except for the point where two pixels are blended together, which is logically ambiguous. The speed at which rr was able to reverse continue through this execution was very impressive!

Here’s the trace of this part: rr-trace-reftest-pixel-origin

From here I started comparing a replay of a failing test with a replay of a non-failing test, and it was clear that the DisplayList was different. In one we have an nsDisplayBackgroundColor; in the other we don’t. From here I was able to step through the decoder and compare the sequence. This was very useful in ruling out possible theories. It was easy to step forwards and backwards in the good and bad replay debugging sessions to test out various theories about race conditions and to understand at which part of the decode process the image was rejected. It turned out that we sent two decodes, one for the metadata that is used to size the frame tree and the other for the image data itself.

In hindsight, it would have been quicker to start debugging this test by looking at the frame tree first (and, I imagine, for other tests, at the display list and layer tree). It works even better if you have a good and a bad trace so that you can compare the frame trees. From here, I found that the difference in the layer tree came from a change hint that wasn’t guaranteed to come in before the draw.

The problem is now well understood: when we do a sync decode on reftest draw and there’s an image error, we won’t flush the style hints, since we’re already too deep in the painting pipeline.

In the last two weeks, we landed 130 PRs in the Servo organization’s repositories.

After months of work by vlad and many others, Windows support landed! Thanks to everyone who contributed fixes, tests, reviews, and even encouragement (or impatience!) to help us make this happen.

geckolib target to our CI

Screencast of this post being upvoted on reddit… from Windows!

We had a meeting on some CI-related woes, documenting tags and mentoring, and dependencies for the style subsystem.

The Monday Project Meeting


With the release of Firefox 44, we are pleased to welcome the 28 developers who contributed their first code change to Firefox in this release, 23 of whom were brand new volunteers! Please join us in thanking each of these diligent and enthusiastic individuals, and take a look at their contributions:

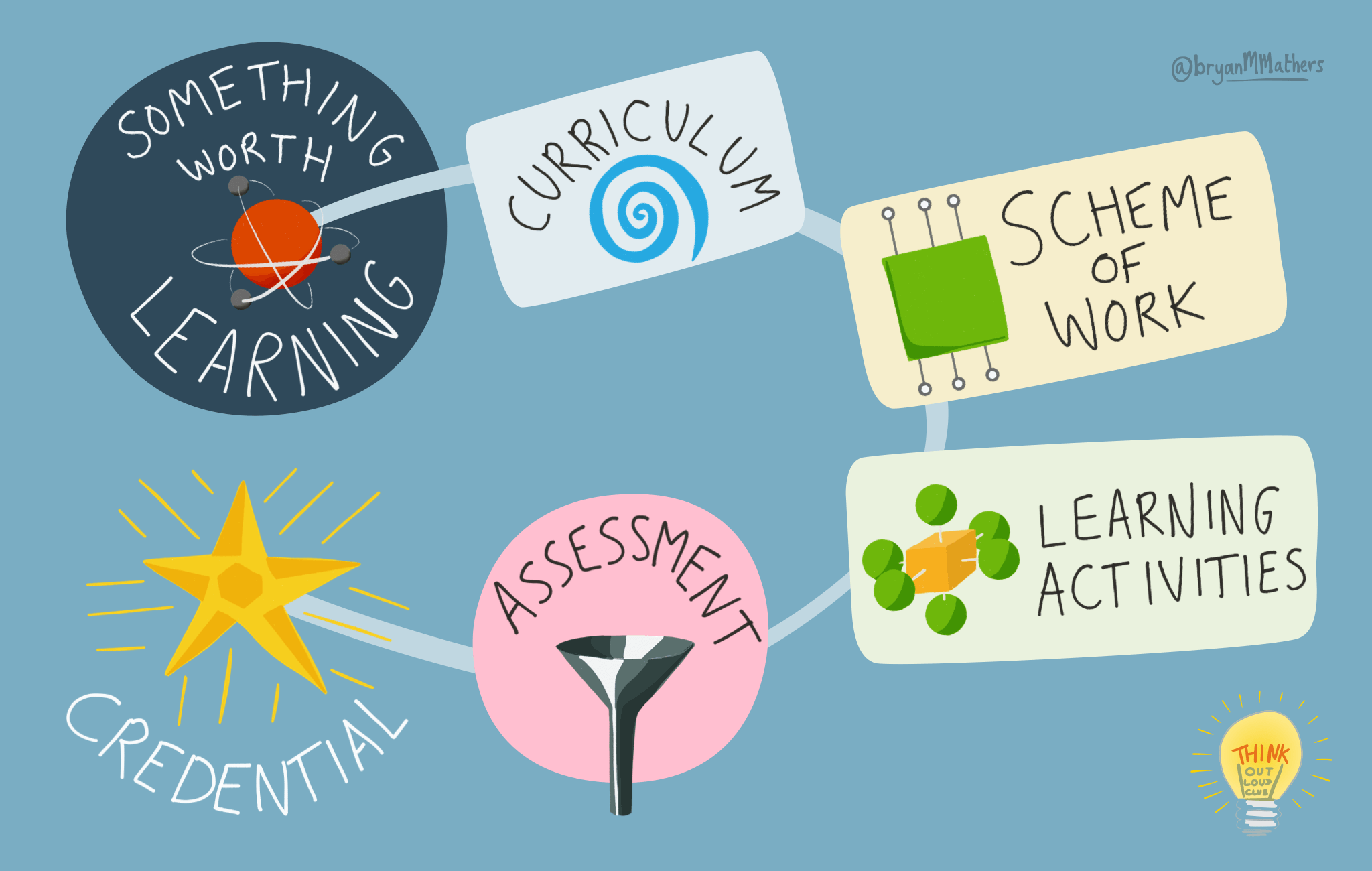

The image above was created by Bryan Mathers for our presentation at BETT last week. It shows the way that, in broad brushstrokes, learning design should happen. Before microcredentials such as Open Badges this was a difficult thing to do as both the credential and the assessment are usually given to educators. The flow tends to go backwards from credentials instead of forwards from what we want people to learn.

But what if you really were starting from scratch? How could you design a digital skills framework that contains knowledge, skills, and behaviours worth learning? Having written my thesis on digital literacies and led Mozilla’s Web Literacy Map for a couple of years, I’ve got some suggestions.

One of the most important things to define is who your audience is for your digital skills framework. Is it for learners to read? Who are they? How old are they? Are you excluding anyone on purpose? Why / why not?

You might want to do some research and work around user personas as part of a user-centred design approach. This ensures you’re designing for real people instead of figments of your imagination (or, worse still, in line with your prejudices).

It’s also good practice to make the language used in the skills framework as precise as possible. Jargon is technical language used for the sake of it. There may be times when it’s impossible not to use a word (e.g. ‘meme’). If you do this then link to a definition or include a glossary. It’s also useful to check the ‘reading level’ of your framework and, if you really want a challenge, try using Up-Goer Five language.

It’s extremely easy, when creating a framework for learning, to fall into the ‘knowledge trap’. Our aim when creating the raw materials from which someone can build a curriculum is to focus on action. Knowledge should make a difference in practice.

One straightforward way to ensure that you’re focusing on action rather than head knowledge is to use verbs when constructing your digital skills framework. If you’re familiar with Bloom’s Taxonomy, then you may find The Differentiator useful. This pairs verbs with the various levels of Bloom’s.

A framework needs to be a living, breathing thing. It should be subject to revision and updated often. For this reason, you should add version numbers to your documentation. Ideally, the latest version should be at a canonical URL and you should archive previous versions to static URLs.

I would also advise releasing the first version of your framework not as ‘version 1.0’ but as ‘v0.1’. This shows that you’re willing for others to provide input, that there will be further versions, and that you know you haven’t got it right first time (and forevermore).

Questions? Comments? Ask me on Twitter (@dajbelshaw). I also consult around this kind of thing, so hit me up on hello@dynamicskillset.com

Tonight is Robbie Burns night, in honour of that great Scottish poet. But tonight had me thinking about another night in my past.

It was about 5 years ago, maybe less, I struggle to remember now. I was in the UK visiting family and my Dad was sick. Cancer and its treatment is tough, you have good weeks, you have bad weeks and you have really fucking bad weeks. This was a good week and for some reason I was in the UK.

Myself, my brother and my sister-in-law went down to see him that night. It was Robbie Burns night and that meant an excuse for haggis, really, truly terrible scotch, Scottish dancing and all that. There are many times when I look back at time with my Dad in those last few years. This was definitely one of those times. He was my Dad at his best, cracking jokes and having fun. Living life to the absolute fullest, while you still have that chance.

We had a great night. That ended way too soon.

Not long after that the cancer came back and that was that.

But suddenly tonight, in a bar in Portland I had these memories of my Dad in a waistcoat cracking jokes and having fun on Robbie Burns night. No-one else in the bar seemed to know what night it was. You'd think Robbie Burns night might get a little bit more appreciation, but hey.

In the many years I've been running this blog I've never written about my Dad passing away. Here's the first time. I miss him.

Hey Robbie Burns? Thanks for making me remember that night.

Hello and welcome to another issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us an email! Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

This week's edition was edited by: nasa42, brson, and llogiq.

Announcing Rust 1.6.


129 pull requests were merged in the last week.

See the triage digest and subteam reports for more details.

cargo init.

btree_set::{IntoIter, Iter, Range} covariant.

slice::binary_search.

std::sync::mpsc: Add fmt::Debug stubs.

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

[ to the FOLLOW(ty) in macro future-proofing rules.

RangeInclusive to use an enum.

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now. This week's FCPs are:

IndexAssign trait that allows overloading "indexed assignment" expressions like a[b] = c.

alias attribute to #[link] and -l.

unreachable and a lint.

If you are running a Rust event, please add it to the calendar to get it mentioned here. Email Erick Tryzelaar or Brian Anderson for access.

Tweet us at @ThisWeekInRust to get your job offers listed here!

This week's Crate of the Week is racer which powers code completion in all Rust development environments.

Thanks to Steven Allen for the suggestion.

Submit your suggestions for next week!

Memory errors are fundamentally state errors, and Rust's move semantics, borrowing, and aliasing XOR mutating help enormously for me to reason about how my program changes state as it executes, to avoid accidental shared state and side effects at a distance. Rust more than any other language I know enables me to do compiler driven design. And internalizing its rules has helped me design better systems, even in other languages.

— desiringmachines on /r/rust.

Thanks to dikaiosune for the suggestion.

Speaking of, downloadable fonts were exactly the same problem on the Sun Ultra-3 laptop I've been refurbishing; Oracle still provides a free Solaris 10 build of 38ESR, but it crashes on web fonts for reasons I have yet to diagnose, so I just have them turned off. Yes, it really is a SPARC laptop, a rebranded Tadpole Viper, and I think the fastest one ever made in this form factor (a 1.2GHz UltraSPARC IIIi). It's pretty much what I expected the PowerBook G5 would have been -- hot, overthrottled and power-hungry -- but Tadpole actually built the thing and it's not a disaster, relatively speaking. There's no JIT in this Firefox build, the brand new battery gets only 70 minutes of runtime even with the CPU clock-skewed to hell, it stands a very good chance of rendering me sterile and/or medium rare if I actually use it in my lap and it had at least one sudden overtemp shutdown and pooped all over the filesystem, but between Firefox, Star Office and pkgsrc I can actually use it. More on that for laughs in a future post.

It has been pointed out to me that Leopard Webkit has not made an update in over three months, so hopefully Tobias is still doing okay with his port.

Last year, we unveiled the Mozilla Open Software Patent License as part of our Initiative to help limit the negative impacts that patents have on open source software. While those were an important first step for us, we continue to do more. This past Wednesday, Mozilla joined several other tech and software companies in filing an amicus brief with the Supreme Court of the United States in the Halo and Stryker cases.

In the brief, we urge the Court to limit the availability of treble damages. Treble damages are significant because they greatly increase the amount of money owed if a defendant is found to “willfully infringe” a patent. As a result, many open source projects and technology companies will refuse to look into or engage in discussions about patents, in order to avoid even a remote possibility of willful infringement. This makes it very hard to address the chilling effects that patents can have on open source software development, open innovation, and collaborative efforts.

We hope that our brief will help the Court see how this legal standard has affected technology companies and persuade the Court to limit treble damages.

In Firefox 43, we made it a default requirement for add-ons to be signed. This requirement can be disabled by toggling a preference that was originally scheduled to be removed in Firefox 44 for release and beta versions (this preference will continue to be available in the Nightly, Developer, and ESR Editions of Firefox for the foreseeable future).

We are delaying the removal of this preference to Firefox 46 for a couple of reasons: We’re adding a feature in Firefox 45 that allows temporarily loading unsigned restartless add-ons in release, which will allow developers of those add-ons to use Firefox for testing, and we’d like this option to be available when we remove the preference. We also want to ensure that developers have adequate time to finish the transition to signed add-ons.

The updated timeline is available on the signing wiki, and you can look up release dates for Firefox versions on the releases wiki. Signing will be mandatory in the beta and release versions of Firefox from 46 onwards, at which point unbranded builds based on beta and release will be provided for testing.

Releng: drinkin’ wine and makin’ pies.

Modernize infrastructure:

In a continuing effort to enable faster, more reliable, and more easily-run tests for TaskCluster components, Dustin landed support for an in-memory, credential-free mock of Azure Table Storage in the azure-entities package. Together with the fake mock support he added to taskcluster-lib-testing, this allows tests for components like taskcluster-hooks to run without network access and without the need for any credentials, substantially decreasing the barrier to external contributions.
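To illustrate why an in-memory fake removes the need for network access and credentials in tests, here is a minimal Python sketch. It is not the azure-entities API (that package is JavaScript, and its interface is not described here); the class `InMemoryTable` and its method names are hypothetical. The only thing it borrows from Azure Table Storage is the idea that entities are addressed by a partition key plus a row key.

```python
class InMemoryTable:
    """Hypothetical in-memory stand-in for a table store.

    Entities are addressed by (partition_key, row_key) and kept in a
    plain dict, so no network connection or credentials are involved.
    """

    def __init__(self):
        self._rows = {}

    def create(self, partition_key, row_key, properties):
        """Store a new entity; refuse to overwrite an existing one."""
        key = (partition_key, row_key)
        if key in self._rows:
            raise KeyError(f"entity already exists: {key}")
        # Copy so later changes by the caller do not leak into the store.
        self._rows[key] = dict(properties)

    def load(self, partition_key, row_key):
        """Return a copy of the stored entity, or raise KeyError."""
        return dict(self._rows[(partition_key, row_key)])

    def remove(self, partition_key, row_key):
        """Delete an entity, or raise KeyError if it does not exist."""
        del self._rows[(partition_key, row_key)]

    def query(self, partition_key):
        """Return copies of all entities in one partition, ordered by row key."""
        keys = sorted(k for k in self._rows if k[0] == partition_key)
        return [dict(self._rows[k]) for k in keys]
```

A test can then build an `InMemoryTable`, hand it to the code under test in place of the real storage client, and make assertions about what was stored.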

All release promotion tasks are now signed by default. Thanks to Rail for his work here to help improve verifiability and chain-of-custody in our upcoming release process. (https://bugzil.la/1239682)

Beetmover has been spotted in the wild! Jordan has been working on this new tool as part of our release promotion project. Beetmover helps move build artifacts from one place to another (generally between S3 buckets these days), but can also be extended to perform validation actions inline, e.g. checksums and anti-virus. (https://bugzil.la/1225899)
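As a rough illustration of what "validation inline" can mean for a checksum, here is a small Python sketch that copies an artifact only if its SHA-256 digest matches an expected value. This is not Beetmover's code; the function names are hypothetical, and local file paths stand in for S3 buckets.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def copy_verified_artifact(source: Path, destination: Path, expected_sha256: str) -> Path:
    """Copy source to destination, validating the checksum before and after.

    Hypothetical helper: raises ValueError and leaves no file at the
    destination if either check fails.
    """
    if sha256_of(source) != expected_sha256:
        raise ValueError(f"checksum mismatch for source {source}")
    destination.parent.mkdir(parents=True, exist_ok=True)
    shutil.copy2(source, destination)
    if sha256_of(destination) != expected_sha256:
        destination.unlink()
        raise ValueError(f"checksum mismatch after copying to {destination}")
    return destination
```

Checking both ends catches a bad upstream artifact as well as corruption during the copy itself.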

Dustin configured the “desktop-test” and “desktop-build” docker images to build automatically on push. That means that you can modify the Dockerfile under `testing/docker`, push to try, and have the try job run in the resulting image, all without pushing any images. This should enable much quicker iteration on tweaks to the docker images. Note, however, that updates to the base OS images (ubuntu1204-build and centos6-build) still require manual pushes.

Mark landed Puppet code for base Windows 10 support, including management of secrets and SSH keys.

Improve CI pipeline:

Vlad and Amy repurposed 10 Windows XP machines as Windows 7 to improve the wait times in that test pool. (https://bugzil.la/1239785)

Armen and Joel have been working on porting the Gecko tests to run under TaskCluster, and have narrowed the failures down to the single digits. This puts us on-track to enable Linux debug builds and tests in TaskCluster as the canonical build/test process.

Release:

Ben finished up work on enhanced Release Blob validation in Balrog (https://bugzil.la/703040), which makes it much more difficult to enter bad data into our update server.

You may recall Mihai, our former intern who we just hired back in November. Shortly after joining the team, he jumped into the releaseduty rotation to provide much-needed extra bandwidth. The learning curve here is steep, but over the course of the Firefox 44 release cycle, he’s taken on more and more responsibility. He’s even volunteered to do releaseduty for the Firefox 45 release cycle as well. Perhaps the most impressive thing is that he’s also taken the time to update (or write) the releaseduty docs so that the next person who joins the rotation will be that much further ahead of the game. Thanks for your hard work here, Mihai!

Operational:

Hal did some cleanup work to remove unused mozharness configs and directories from the build mercurial repos. These resources have long-since moved into the main mozilla-central tree. Hopefully this will make it easier for contributors to find the canonical copy! (https://bugzil.la/1239003)

Hiring:

We’re still hiring for a full-time Build & Release Engineer, and we are still accepting applications for interns for 2016. Come join us!

Well, I don’t know about you, but all that hard work makes me hungry for pie. See you next week!

Mozilla Foundation Demos January 22 2016


Hello, SUMO Nation!

The third week of the new year is already behind us. Time flies when you’re not paying attention… What are you going to do this weekend? Let us know in the comments, if you feel like sharing :-) I hope to be in the mountains, getting some fresh (bracing) air, and enjoying nature.


Thank you for reading all the way down here… More to come next week! You know where to find us, so see you around – keep rocking the open & helpful web!

Bay Area Rust meetup for January 2016. Topics TBD.


Once a month, web developers from across the Mozilla Project get together to talk about our side projects and drink, an occurrence we like to call “Beer and Tell”.

There’s a wiki page available with a list of the presenters, as well as links to their presentation materials. There’s also a recording available courtesy of Air Mozilla.

First up was shobson with a cool demo of an animated disco ball made entirely with CSS. The demo uses a repeated radial gradient for the background, and linear gradients plus a border radius for the disco ball itself. The demo was made for use in shobson’s WordCamp talk about debugging CSS. A blog post with notes from the talk is available as well.

Next was craigcook, who presented Proton. It’s a CSS framework that is intentionally ugly to encourage use for prototypes only. Unlike other CSS frameworks, the temptation to reuse the classes from the framework in your final page doesn’t occur, which helps avoid the presentational classes that plague sites built using a framework normally.

Proton’s website includes an overview of the layout and components provided, as well as examples of prototypes made using the framework.

If you’re interested in attending the next Beer and Tell, sign up for the dev-webdev@lists.mozilla.org mailing list. An email is sent out a week beforehand with connection details. You could even add yourself to the wiki and show off your side-project!

See you next month!

A lot of exciting things are happening with Participation at Mozilla this month. Here’s a quick round-up of some of the things that are going on!

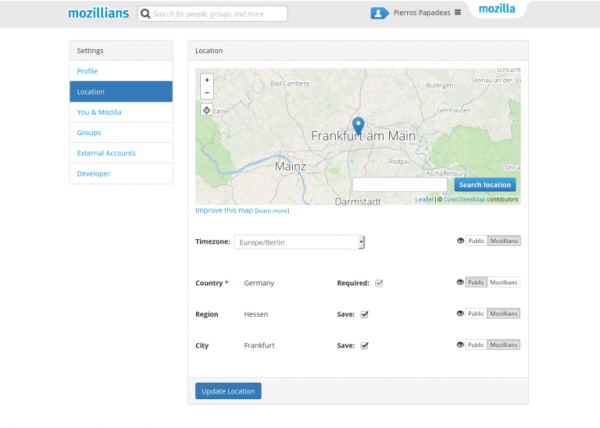

Since the start of this year, the Participation Infrastructure team has had a renewed focus on making mozillians.org a modern community directory to meet Mozilla’s growing needs.

Their first target for 2016 was to improve the UX on the profile edit interface.

“We chose it due to relatively self-contained nature of it, and cause many people were not happy with the current UX. After research of existing tools and applying latest best practices, we designed, coded and deployed a new profile edit interface (which by the way is renamed to Settings now) that we are happy to deliver to all Mozillians.”

Read the full blog here!

Are you a passionate designer looking to contribute to Mozilla? You’ll be happy to hear there is a new way to contribute to the many design projects around Mozilla! Submit issues, find collaborators, and work on open source projects by getting involved!

Learn more here.

This weekend 136 participation leaders from all over the world are heading to Singapore to undergo two days of leadership training to develop the skills, knowledge and attitude to lead Participation in 2016.

Photo credit @thephoenixbird on Twitter

If you know someone attending don’t forget to share your questions and goals with them, and follow along over the weekend by watching the hashtag #MozSummit.

Stay tuned after the event for a debrief of the weekend!

If you’re interested in learning more about all the exciting new features, projects, and plans that were presented at Mozlando look no further! You can now watch the final plenary sessions on Air Mozilla (it’s a lot of fun so I highly recommend it!) here.

Share your questions and comments on discourse here.

Look forward to more updates like these in the coming months!

This was originally posted at StaySafeOnline.org in advance of Data Privacy Day.

Data Privacy Day – which arrives in just a week – is a day designed to raise awareness and promote best practices for privacy and data protection. It is a day that looks to the future and recognizes that we can and should do better as an industry. It reminds us that we need to focus on the importance of having the trust of our users.

We seek to build trust so we can collectively create the Web our users want – the Web we all want.

That Web is based on relationships, the same way that the offline world is. When I log in to a social media account, schedule a grocery delivery online or browse the news, I’m relying on those services to respect my data. While companies are innovating their products and services, they need to be innovating on user trust as well, which means designing to address privacy concerns – and making smart choices (early!) about how to manage data.

A recent survey by Pew highlights the thought that each user puts into their choices – and the contextual considerations in various scenarios. They concluded that many participants were annoyed by, and uncertain about, how their information was used, and that they are choosing not to interact with services they don’t trust. This is a clear call to businesses to foster more trust with their users, which starts by making sure that there are people empowered within your company to ask the right questions: what do your users expect? What data do you need to collect? How can you communicate about that data collection? How should you protect their data? Is holding on to data a risk, or should you delete it?

It’s crucial that users are a part of this process – consumers’ data is needed to offer cool, new experiences and a user needs to trust you in order to choose to give you their data. Pro-user innovation can’t happen in a vacuum – the system as it stands today isn’t doing a good job of aligning user interests with business incentives. Good user decisions can be good business decisions, but only if we create thoughtful user-centric products in a way that closes the feedback loop so that positive user experiences are rewarded with better business outcomes.

Not prioritizing privacy in product decisions will impact the bottom line. From the many data breaches over the last few years to increasing evidence of eroding trust in online services, data practices are proving to be the dark horse in the online economy. When a company loses user trust, whether on privacy or anything else, it loses customers and the potential for growth.

Privacy means different things to different people, but what’s clear is that people make decisions about the products and services that they use based on how those companies choose to treat their users. Over time, the Internet ecosystem has evolved, as has its relationship with users – and some aspects of this evolution threaten the trust that lies at the heart of that relationship. Treating a user as a target – whether for an ad, purchase, or service – undermines the trust and relationship that a business may have with a consumer.

The solution is not to abandon the massive value that robust data can bring to users, but rather, to collect and use data leanly, productively and transparently. At Mozilla, we have created a strong set of internal data practices to ensure that data decisions align with our privacy principles. As an industry, we need to keep users at the center of the product vision rather than viewing them as targets of the product – it’s the only way to stay true to consumers and deliver the best, most trusted experiences possible.

Want to hear more about how businesses can build relationships with their users by focusing on trust and privacy? We’re holding events in Washington, D.C., and San Francisco with some of our partners to talk about it. Please join us!

Gathering data from Certificate Transparency logs, here's a snapshot in time of Let's Encrypt's certificate issuance rate per minute from 7-21 January 2016. On 20 January, DreamHost launched formal support for Let's Encrypt, which coincides with a rate increase.


Note: This is mostly an experimental post with embedding charts; I've more data in the queue.
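The post does not say how the per-minute rate was computed. One plausible approach, sketched here in Python under that assumption, is to bucket log entry timestamps by minute; Certificate Transparency records them as milliseconds since the Unix epoch. The function name is hypothetical, and fetching the entries from a log is left out.

```python
from collections import Counter
from datetime import datetime, timezone


def issuance_per_minute(timestamps_ms):
    """Count entries per UTC minute.

    timestamps_ms: iterable of integer timestamps in milliseconds since
    the Unix epoch, one per certificate seen in the log.
    Returns a dict mapping the start of each minute (an aware UTC
    datetime) to the number of certificates in that minute, in
    chronological order.
    """
    counts = Counter()
    for ts in timestamps_ms:
        # Integer division drops the milliseconds; replace() drops the seconds.
        moment = datetime.fromtimestamp(ts // 1000, tz=timezone.utc)
        counts[moment.replace(second=0, microsecond=0)] += 1
    return dict(sorted(counts.items()))
```

Minutes with no issuance are simply absent from the result, so a chart built from it would need to fill those gaps with zeros.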

This is our weekly gathering of Mozilla's Web QA team filled with discussion on our current and future projects, ideas, demos, and fun facts.


A few days ago the fantastic Fritz from the Netherlands told me that my Hands On Web Audio slides had stopped working and there was no sound coming out of them in Firefox.

@supersole oh noes! I reopened your slides: https://t.co/SO35UfljMI and it doesn't work in @firefox anymore (works in chrome though..)

— Boring Stranger (@fritzvd) January 11, 2016

Which is pretty disappointing for a slide deck that is built to teach you about Web Audio!

I noticed that the issue was only on the introductory slide, which uses a modified version of Stuart Memo’s fantastic THX sound recreation; the rest of the slides did play sound.

I built an isolated test case (source) that used a parameter-capable version of the THX sound code, just in case the issue depended on the number of oscillators, and submitted this funnily titled bug to the Web Audio component: Entirely Web Audio generated sound cuts out after a little while, or emits random tap tap tap sounds then silence.

I can happily confirm that the bug has been fixed in Nightly and the fix will hopefully be “uplifted” to DevEdition very soon, as it was due to a regression.

Paul Adenot (who works on Web Audio and is a Web Audio spec editor, amongst a couple tons of other cool things) was really excited about the bug, saying it was very edge-casey! Yay! And he also explained what actually happened in lay terms: “you’d have to have a frequency that goes down very very slowly so that the FFT code could not keep up”, which is what the THX sound is doing with the filter frequency automation.

I want to thank both Fritz for spotting this and letting me know, and also Stuart for sharing his THX code. It’s amazing what happens when you put stuff on the net and lots of different people use it in different ways and configurations. Together we make everything more robust.

Of course also sending thanks to Paul and Ben for identifying and fixing the issue so fast! It’s not been even a week! Woohoo!

Well done everyone!

Since the start of this year, the Participation Infrastructure team has had a renewed focus on making mozillians.org a modern community directory to meet Mozilla’s growing needs. This will not be a one-time effort. We need to invest technically and programmatically in order to deliver a first-class product that will be the foundation for identity management across the Mozilla ecosystem.

Mozillians.org is full of functionality as it is today, but is paying the debt of being developed by 5 different teams over the past 5 years. We started simple this time: we updated all core technology pieces, did privacy and security reviews, and started the process of consolidating and modernizing many of the things we do in the site.

Our first target was Profile Edit. We chose it because it is relatively self-contained and because many people were not happy with the current UX. After researching existing tools and applying the latest best practices, we designed, coded and deployed a new profile edit interface (now renamed Settings) that we are happy to deliver to all Mozillians.

Have a look for yourself and don’t miss the chance to update your profile while you do it!

Nikos (on the front-end), Tasos and Nemo (on the back-end) worked hard to deliver this in a speedy manner (as they are used to), and the end result is a testament to what is coming next on Mozillians.org.

Our next target? Groups. Currently it is unclear what all those settings in groups are, what functionality they provide, and how teams within Mozilla will use them. We will be tackling this soon. After that, search and stats will get our attention, in an ongoing effort to fortify mozillians.org functionality. Stay tuned, and as always feel free to file bugs and contribute in the process.

Last year I joked…

Thinking about writing a blog post listing the blog posts I’ve been meaning to write… Maybe that will save some time

— Adam Lofting (@adamlofting) November 20, 2015

Now, it has come to this.

But my most requested blog post by far is an update on the status of my shed / office, which I was tagging on to the end of my blog posts at this time last year. Many people at Mozfest wanted to know about the shed… so here it is.

This time last year:

Starting in the new office today. It will take time to make it *nice* but it works for now. pic.twitter.com/sWoC4kFNLc

— Adam Lofting (@adamlofting) January 28, 2015

Some pictures from this morning:

It’s a pretty nice place to work now and it doubles as a useful workshop on the weekends. It needs a few finishing touches, but the law of diminishing returns means those finishing touches are lower priority than work that needs to be done elsewhere in the house and garden. So it’ll stay like this a while longer.

The benefit of being a father again (Freya, my third child, was born last week) is that while on paternity leave and between two baby bottles, I can hack on fun stuff.

A few months ago, I built a Pelican-based website for my running club; check it out at http://acr-dijon.org. There's nothing special about it, except that I am not the one feeding it. The content is added by people from the club who have zero knowledge of software, let alone stuff like vim or command line tools.

I set up a github-based flow for them, where they add content through the github UI and its minimal reStructuredText preview feature - and then a few of my crons update the website on the server I host. For images and other media, they are uploading them via FTP using FireSSH in Firefox.

For the comments, I've switched from Disqus to ISSO after I got annoyed by the fact that it was impossible to display a simple Disqus UI for people to comment without having to log in.

I had to make my club friends go through a minimal reStructuredText syntax training, and things are more or less working now.

The system has a few caveats though:

So I've decided to build my own web editing tool with the following features:

The first step was to build a reStructuredText parser that would read some reStructuredText and render it back into a cleaner version.

We've imported almost 2000 articles in Pelican from the old blog, so I had a lot of samples to make my parser work well.

I first tried rst2rst but that parser was built for a very specific use case (text wrapping) and was incomplete. It was not parsing all of the reStructuredText syntax.

Inspired by it, I wrote my own little parser using docutils.

Understanding docutils is no small task. The project is very powerful but quite complex. One thing sorely missing from the docutils parser tools is the ability to get the source text of any node, including its children, so you can render back the same source.

That's roughly what I had to add in my code. It's ugly but it does the job: it will parse rst files and render the same content, minus all the extraneous empty lines, spaces, tabs etc.

Content browsing is pretty straightforward: my admin tool lets you browse the Pelican content directory and lists all articles, organized by categories.

In our case, each category has a top directory in content. The browser parses the articles using my parser and displays paginated lists.

I had to add a cache system for the parser, because one of the directories contains over 1000 articles -- and browsing was kind of slow :)

The last big bit was the live editor. I stumbled on a neat little tool called rsted that provides a live preview of the reStructuredText as you type it. And it includes warnings!

Check it out: http://rst.ninjs.org/

I've stripped it and kept what I needed, and included it in my app.

I am quite happy with the result so far. I need to add real tests and a bit of documentation, and I will start to train my club friends on it.

The next features I'd like to add are:

The project lives here: https://github.com/AcrDijon/henet

I am not going to release it, but if someone finds it useful, I could.

It's built with Bottle & Bootstrap as well.

I wrote a long and probably dull chapter on closures and first-class and higher-order functions in Rust. It goes into some detail on the implementation and some of the subtleties like higher-ranked lifetime bounds.

I was going to post it here too, but it is really too long. Instead, pop over to the 'Rust for C++ programmers' repo and read it there.

I’m hacking on an assembly project, and wanted to document some of the tricks I was using for figuring out what was going on. This post might seem a little basic for folks who spend all day heads down in gdb or who do this stuff professionally, but I just wanted to share a quick intro to some tools that others may find useful. (oh god, I’m doing it)

If you’re coming from gdb to lldb, there are a few differences in commands. LLDB has great documentation on some of the differences. Everything in this post about LLDB is pretty much there.

The bread and butter commands when working with gdb or lldb are:

You can hit enter if you want to run the last command again, which is really useful if you want to keep stepping over statements repeatedly.

I’ve been using LLDB on OSX. Let’s say I want to debug a program I can build, but that is crashing or something:

Setting a breakpoint on jump to label

Running the program until breakpoint hit

Seeing more of the current stack frame

Getting a back trace (call stack)

Peeking at the upper stack frame

Back down to the breakpoint-halted stack frame

Dumping the values of registers

Reading just one register

When you’re trying to figure out what system calls are made by some C code, using dtruss is very helpful. dtruss is available on OSX and seems to be some kind of wrapper around DTrace.

If you compile with -g to emit debug symbols, you can use lldb’s disassemble command to get the equivalent assembly.

Anyways, I’ve been learning some interesting things about OSX that I’ll be sharing soon. If you’d like to learn more about x86-64 assembly programming, you should read my other posts about writing x86-64 and a toy JIT for Brainfuck (the creator of Brainfuck liked it).

I should also do a post on Mozilla’s rr, because it can do amazing things like step backwards. Another day…

Every new year gives you an opportunity to sit back, relax, have some scotch and reflect on the past year. Holidays give you enough free time. Even if you decide not to take a vacation around the holidays, it's usually calm and peaceful.

This time, I found myself thinking mostly about productivity, being effective, feeling busy, overwhelmed with work and other related topics.

When I started at Mozilla (almost 6 years ago!), I tried to apply all my GTD and time management knowledge and techniques. Working remotely and in a different time zone was an advantage - I had close to zero interruptions. It worked perfectly.

Last year I realized that my productivity skills had faded away somehow. 40h+ workweeks, working on weekends, delivering goals in the last week of quarter don't sound like good signs. Instead of being productive I felt busy.

"Every crisis is an opportunity". Time to take a step back and reboot myself. Burning out at work is not a good idea. :)

Here are some ideas/tips that I wrote down for myself and that you may find useful.

This is my list of things that I try to use every day. Looking forward to seeing improvements!

I would appreciate your thoughts on this topic. Feel free to comment or send a private email.

Happy Productive New Year!

Hello 2016! We’re happy to announce the first Rust release of the year, 1.6. Rust is a systems programming language focused on safety, speed, and concurrency.

As always, you can install Rust 1.6 from the appropriate page on our website, and check out the detailed release notes for 1.6 on GitHub. About 1100 patches were landed in this release.

This release contains a number of small refinements, one major feature, and a change to Crates.io.

The largest new feature in 1.6 is that libcore is now stable! Rust’s standard library is two-tiered: there’s a small core library, libcore, and the full standard library, libstd, that builds on top of it. libcore is completely platform agnostic, and requires only a handful of external symbols to be defined. Rust’s libstd builds on top of libcore, adding support for memory allocation, I/O, and concurrency. Applications using Rust in the embedded space, as well as those writing operating systems, often eschew libstd, using only libcore.

libcore being stabilized is a major step towards being able to write the lowest levels of software using stable Rust. There’s still future work to be done, however. This will allow for a library ecosystem to develop around libcore, but applications are not fully supported yet. Expect to hear more about this in future release notes.
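As a rough illustration of what “using only libcore” can look like, here is a minimal sketch of a helper that needs nothing beyond core. The function name checksum and its limit parameter are made up for this example. A real libcore-only crate would also put #![no_std] at the top of its crate root; that is left as a comment here so the snippet still runs as an ordinary program.

```rust
// Assumption: a real libcore-only crate would begin its crate root with
// `#![no_std]`. It is left out so this sketch runs as a normal program.

/// Hypothetical helper: wrapping sum of at most `limit` leading bytes.
/// It needs no allocation, I/O, or threads, so libcore alone is enough.
pub fn checksum(data: &[u8], limit: usize) -> u8 {
    // `core::cmp::min` is the same function that libstd re-exports.
    let n = core::cmp::min(data.len(), limit);
    data[..n].iter().fold(0u8, |acc, &byte| acc.wrapping_add(byte))
}

fn main() {
    assert_eq!(checksum(&[1, 2, 3], 8), 6);
    // Only the first two bytes are summed, and the sum wraps around at 256.
    assert_eq!(checksum(&[255, 2, 9], 2), 1);
}
```

Everything used here (slices, iterators, integer arithmetic) lives in libcore, which is why code of this shape can be shared between embedded targets and ordinary applications.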

About 30 library functions and methods are now stable in 1.6. Notable improvements include:

The drain() family of functions on collections. These methods let you move elements out of a collection while allowing them to retain their backing memory, reducing allocation in certain situations.

A number of implementations of From for converting between standard library types, mainly between various integral and floating-point types.

Finally, Vec::extend_from_slice(), which was previously known as push_all(). This method has a significantly faster implementation than the more general extend().
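The three additions above can be sketched in a few lines. This is only an illustrative example: the helper names take_front, widen and append are invented here and are not part of the standard library; only drain(), From and extend_from_slice() are the APIs being shown.

```rust
/// Moves up to `n` leading elements out of `items`.
/// `items` keeps its backing buffer, so no reallocation happens.
fn take_front(items: &mut Vec<i32>, n: usize) -> Vec<i32> {
    let n = n.min(items.len()); // drain(..n) would panic if n > len
    items.drain(..n).collect()
}

/// Lossless widening through a `From` impl between integral types.
fn widen(small: u8) -> u32 {
    u32::from(small)
}

/// Appends a whole slice in one call instead of pushing item by item.
fn append(dst: &mut Vec<u8>, extra: &[u8]) {
    dst.extend_from_slice(extra);
}

fn main() {
    let mut queue = vec![1, 2, 3, 4, 5];
    let capacity_before = queue.capacity();
    let front = take_front(&mut queue, 2);
    assert_eq!(front, vec![1, 2]);
    assert_eq!(queue, vec![3, 4, 5]);
    // Draining does not shrink the backing allocation.
    assert_eq!(queue.capacity(), capacity_before);

    assert_eq!(widen(200), 200u32);
    assert_eq!(f64::from(1.5f32), 1.5f64);

    let mut bytes = vec![1u8, 2];
    append(&mut bytes, &[3, 4]);
    assert_eq!(bytes, vec![1, 2, 3, 4]);
}
```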

See the detailed release notes for more.

If you maintain a crate on Crates.io, you might have seen a warning: newly uploaded crates are no longer allowed to use a wildcard when describing their dependencies. In other words, this is not allowed:

[dependencies]
regex = "*"

Instead, you must actually specify a specific version or range of versions, using one of the semver crate’s various options: ^, ~, or =.

A wildcard dependency means that you work with any possible version of your dependency. This is highly unlikely to be true, and causes unnecessary breakage in the ecosystem. We’ve been advertising this change as a warning for some time; now it’s time to turn it into an error.

We had 132 individuals contribute to 1.6. Thank you so much!

One of the advantages of listing an add-on or theme on addons.mozilla.org (AMO) is that you’ll get statistics on your add-on’s usage. These stats, which are covered by the Mozilla privacy policy, provide add-on developers with information such as the number of downloads and daily users, among other insights.

Currently, the data that generates these statistics can go back as far as 2007, as we haven’t had an archiving policy. As a result, statistics take up the vast majority of disk space in our database and require a significant amount of processing and operations time. Statistics over a year old are very rarely accessed, and the value of their generation is very low, while the costs are increasing.

To reduce our operating and development costs, and increase the site’s reliability for developers, we are introducing an archiving policy.

In the coming weeks, statistics data over one year old will no longer be stored in the AMO database, and reports generated from them will no longer be accessible through AMO’s add-on statistics pages. Instead, the data will be archived and maintained as plain text files, which developers can download. We will write a follow-up post when these archives become available.

If you’ve chosen to keep your add-on’s statistics private, they will remain private when stats are archived. You can check your privacy settings by going to your add-on in the Developer Hub, clicking on Edit Listing, and then Technical Details.

The total number of users and other cumulative counts on add-ons and themes will not be affected and these will continue to function.

If you have feedback or concerns, please head to our forum post on this topic.

mconley livehacks on real Firefox bugs while thinking aloud.

One of the things the Firefox team has been doing recently is having onboarding sessions for new hires. This onboarding currently covers:

My first day consisted of some useful HR presentations and then I was given my laptop and a pointer to a wiki page on building Firefox. Needless to say, it took me a while to get started! It would have been super convenient to have an introduction to all the stuff above.

I’ve been asked to do the C++ and Gecko session three times. All of the sessions are open to whoever wants to come, not just the new hires, and I think yesterday’s session was easily the most well-attended yet: somewhere between 10 and 20 people showed up. Yesterday’s session was the first session where I made the slides available to attendees (should have been doing that from the start…) and it seemed equally useful to make the slides available to a broader audience as well. The Gecko and C++ Onboarding slides are up now!

This presentation is a “living” presentation; it will get updated for future sessions with feedback and as I think of things that should have been in the presentation or better ways to set things up (some diagrams would be nice…). If you have feedback (good, bad, or ugly) on particular things in the slides or you have suggestions on what other things should be covered, please contact me! Next time I do this I’ll try to record the presentation so folks can watch that if they prefer.

Brendan is back, and he has a plan to save the Web. It’s a big and bold plan, and it may just work. I am pretty excited about this. If you have 5 minutes to read along I’ll explain why I think you should be as well.

The Web is broken

Let’s face it, the Web today is a mess. Everywhere we go online we are constantly inundated with annoying ads. Often pages are more ads than content, and the more ads the industry throws at us, the more we ignore them, the more obnoxious ads get, trying to catch our attention. As Brendan explains in his blog post, the browser used to be on the user’s side; we call browsers the user agent for a reason. Part of the early success of Firefox was that it blocked popup ads. But somewhere over the last 10 years of modern Web browsers, browsers lost their way and stopped being the user’s agent alone. Why?

Browsers aren’t free

Making a modern Web browser is not free. It takes hundreds of engineers to make a competitive modern browser engine. Someone has to pay for that, and that someone needs to have a reason to pay for it. Google doesn’t make Chrome for the good of mankind. Google makes Chrome so you can consume more Web and along with it, more Google ads. Each time you click on one, Google makes more money. Chrome is a billion dollar business for Google. And the same is true for pretty much every other browser. Every major browser out there is funded through advertisement. No browser maker can escape this dilemma. Maybe now you understand why no major browser ships with a built-in ad blocker enabled by default, even though ad blockers are by far the most popular add-ons.

Our privacy is at stake

It’s not just the unregulated flood of advertisement that needs a solution. Every ad you see is often selected based on sensitive private information advertisement networks have extracted from your browsing behavior through tracking. Remember how the FBI used to track what books Americans read at the library, and it was a big scandal? Today the Googles and Facebooks of the world know almost every site you visit, everything you buy online, and they use this data to target you with advertisement. I am often puzzled why people are so afraid of the NSA spying on us but show so little concern about all the deeply personal data Google and Facebook are amassing about everyone.

Blocking alone doesn’t scale

I wish the solution was as easy as just blocking all ads. There is a lot of great Web content out there: news, entertainment, educational content. It’s not free to make all this content, but we have gotten used to consuming it “for free”. Banning all ads without an alternative mechanism would break the economic backbone of the Web. This dilemma has existed for many years, and the big browser vendors seem to have given up on it. It’s hard to blame them. How do you disrupt the status quo without sawing off the (ad revenue) branch you are sitting on?

It takes a newcomer to fix this mess

I think it’s unlikely that the incumbent browser vendors will make any bold moves to solve this mess. There is too much money at stake. I am excited to see a startup take a swipe at this problem, because they have little to lose (seed money aside). Brave is getting the user agent back into the game. Browsers have intentionally remained silent onlookers to the ad industry invading users’ privacy. With Brave, Brendan makes the user agent step up and fight for the user as it was always intended to do.

Brave basically consists of two parts: part one blocks third-party ad content and tracking signals; part two inserts alternative ad content in their place. Sites can sign up to get a fair share of any ads that Brave displays for them. The big change in comparison to the status quo is that the Brave user agent is in control and can regulate what you see. It’s like a speed limit for advertisement on the Web, with the goal to restore balance and give sites a fair way to monetize while giving the user control through the user agent.

Making money with a better Web

The ironic part of Brave is that it’s for-profit. Brave can make money by reducing obnoxious ads and protecting your privacy at the same time. If Brave succeeds, it’s going to drain money away from the crappy privacy-invasive obnoxious advertisement world we have today, and publishers and sites will start transacting in the new Brave world that is regulated by the user agent. Brave will take a cut of these transactions. And I think this is key. It aligns the incentives right. The current funding structure of major browsers encourages them to keep things as they are. Brave’s incentive is to bring down the whole diseased temple and usher in a better Web. Exciting.

Quick update: I had a chance to look over the Brave GitHub repo. It looks like the Brave Desktop browser is based on Chromium, not Gecko. Yes, you read that right. Brave is using Google’s rendering engine, not Mozilla’s. Much to write about this one, but it will definitely help Brave “hide” better in the large volume of Chrome users, making it harder for sites to identify and block Brave users. Brave for iOS seems to be a fork of Firefox for iOS, but it manages to block ads (Mozilla says they can’t).

@media (-webkit-transform-3d) is a funny thing that exists on the web.

It's like, a media query feature in the form of a prefixed CSS property, which should tell you if your (once upon a time probably Safari-only) browser supports 3D transforms, invented back in the day before we had @supports.

(According to Apple docs it first appeared in Safari 4, along side the other -webkit-transition and -webkit-transform-2d hybrid-media-query-feature-prefixed-css-properties-things that you should immediately forget exist.)

Older versions of Modernizr used this (and only this) to detect support for 3D transforms, and that seemed pretty OK. (They also did the polite thing and tested @media (transform-3d), but no browser has ever actually supported that, as it turns out). And because they're so consistently polite, they've since updated the test to prefer @supports too (via a pull request from Edge developer Jacob Rossi).

As it turns out, other browsers have been updated to support 3D CSS transforms, but sites didn't go back and update their version of Modernizr. So unless you support @media (-webkit-transform-3d) these sites break. Niche websites like yahoo.com and about.com.

So, anyways. I added @media (-webkit-transform-3d) to the Compat Standard and we added support for it in Firefox so websites stop breaking.

But you shouldn't ever use it—use @supports. In fact, don't even share this blog post. Maybe delete it from your browser history just in case.

In an effort to reduce the APK size of Firefox for Android and to remove unnecessary code, I will be helping remove the Honeycomb code throughout the Fennec project. Honeycomb will no longer be supported as of Firefox 46, so this code is not necessary.

Bug 1217675 will keep track of the progress.

This should help reduce the APK size somewhat and clear the road for dropping Gingerbread, hopefully sometime in the near future.

Since June of last year, I’ve been co-founding a new startup called Brave Software with Brendan Eich. With our amazing team, we're developing something pretty epic.

We're building the next generation of browsers for smartphones and laptops as part of our new ad-tech platform. Our terms of use give our users control over their personal data by blocking ad trackers and third-party cookies. We re-integrate fewer and better ads directly into programmatic ad positions, paying revenue shares to users and publishers to support both of these essential parties in the web ecosystem.

Coming built in, we have new faster engines for tracking protection, ad block, HTTPS Everywhere, safe ads with rev-share, and more. We're seeing massive web page load time speedups.

We're starting to bring people in for early developer build access on all platforms.

I’m happy to share that the browsers we’re developing are fully open source. We welcome contributors, and would love your help.

Some of the repositories include:

I put the slides for my ManhattanJS talk, "PIEfection" up on GitHub the other day (sans images, but there are links in the source for all of those).

I completely neglected to talk about the Maillard reaction, which is responsible for food tasting good, and specifically for browning pie crusts. tl;dr: Amino acid (protein) + sugar + ~300°F (~150°C) = delicious. There are innumerable and poorly understood combinations of amino acids and sugars, but this class of reaction is responsible for everything from searing steaks to browning crusts to toasting marshmallows.

Above ~330°F, you get caramelization, which is also a delicious part of the pie and crust, but you don't want to overdo it. Starting around ~400°F, you get pyrolysis (burning, charring, carbonization) and below 285°F the reaction won't occur (at least not quickly) so you won't get the delicious compounds.

(All of these are, of course, temperatures measured in the material, not in the air of the oven.)

So, instead of an egg wash on your top crust, try whole milk, which has more sugar to react with the gluten in the crust.

I also didn't get a chance to mention a rolling technique I use, that I learned from a cousin of mine, in whose baking shadow I happily live.

When rolling out a crust after it's been in the fridge, first roll it out in a long stretch, then fold it in thirds; do it again; then start rolling it out into a round. Not only do you add more layer structure (mmm, flaky, delicious layers) but it'll fill in the cracks that often form if you try to roll it out directly, resulting in a stronger crust.

Those pepper flake shakers, filled with flour, are a great way to keep adding flour to the workspace without worrying about your buttery hands.

For transferring the crust to the pie plate, try rolling it up onto your rolling pin and unrolling it on the plate. Tapered (or "French") rolling pins (or a wine bottle) are particularly good at this since they don't have moving parts.

Finally, thanks again to Jenn for helping me get pies from one island to another. It would not have been possible without her!

Is the omnipresence of social networks, search engines, and advertising compatible with our right to privacy?

Four score and many moons ago, I decided to move this blog from Blogger to WordPress. The transition took longer than expected, but it’s finally done.

If you’ve been following along at the old address, https://mykzilla.blogspot.com/, now’s the time to update your address book! If you’ve been going to https://mykzilla.org/, however, or you read the blog on Planet Mozilla, then there’s nothing to do, as that’s the new address, and Planet Mozilla has been updated to syndicate posts from it.

This post was first published on the blog at https://blog.mozilla.org/community. Many thanks to Aryx and Coce for the translation!

All over the world, passionate Mozillians are working to advance Mozilla’s mission. But ask five different Mozillians what the mission is, and you may well get seven different answers.

At the end of last year, Mozilla’s CEO Chris Beard laid out a clear picture of Mozilla’s mission, vision, and role, and showed how our products will bring us closer to that goal over the next five years. The aim of these strategic guidelines is to develop a concise, shared understanding of our goals across Mozilla as a whole, one that makes it easier for us as individuals to make decisions and recognize opportunities to move Mozilla forward.

We cannot achieve Mozilla’s mission alone. The thousands of Mozillians around the world need to be behind it, so that we can achieve incredible things quickly and with a louder voice than ever before.

That is why one of the Participation Team’s six strategic initiatives for the first half of the year is to educate as many Mozillians as possible about these guidelines, so that in 2016 we can have our most significant impact yet. We will publish another post that looks more closely at the Participation Team’s strategy for 2016.

Understanding this strategy will be essential for anyone who wants to make an impact at Mozilla this year, because it will determine what we stand for, where we put our resources, and which projects we focus on in 2016.

At the start of the year, we will go deeper into this strategy and share more details on how the various teams and projects at Mozilla are working toward these goals.

The current call to action is to think about these goals in the context of your work, and about how you would like to contribute at Mozilla in the coming year. This will help shape your innovations, ambitions, and impact in 2016.

We hope you will join the discussion and share your questions, comments, and plans for advancing the strategic guidelines in 2016 here on Discourse, and share your thoughts on Twitter with the hashtag #Mozilla2016Strategy.

Our Mission

To ensure that the Internet is a global public resource that is accessible to all.

Our Vision

An Internet that truly puts people first. An Internet where people can shape their own experience. An Internet where people can decide for themselves and are safe and independent.

Our Role

Mozilla is, in the truest sense of the word, your advocate in your online life. We stand up for you, both in your online experience and for your interests in the health of the Internet.

Our Work

Our Pillars

How we want to bring about positive change in the future

How we work is just as important as the goal. Our health and lasting impact depend on how much our products and activities:

Last week we ran an internal “hack day” here at the Mozilla space in London. It was just a bunch of software engineers looking at various hardware boards and things and learning about them.

Here’s what we did!

I essentially kind of bricked my Arduino Duemilanove trying to get it working with Johnny Five, but it was fine–apparently there’s a way to recover it using another Arduino, and someone offered to help with that in the next NodeBots London, which I’m going to attend.

Thinks he’s having issues with cables. It seems like the boards are not reset automatically by the Arduino IDE nowadays? He found that the button on the board actually resets the board when pressed, i.e. it’s the RESET button.

On the Raspberry Pi side of things, he was very happy to put all his old-school Linux skills in action configuring network interfaces without GUIs!

Played with mDNS advertising and listening to services on Raspberry Pi.

(He was very quiet)

(He also built a very nice LEGO case for the Raspberry Pi, but I do not have a picture, so just imagine it).

Wilson: “I got my Raspberry Pi on the Wi-Fi”

Francisco: “Sorry?”

Wilson: “I mean, you got my Raspberry Pi on the network. And now I’m trying to build a web app on the Pi…”

Exploring the Pebble with Linux. There’s a libpebble, and he managed to connect…

(sorry, I had to leave early so I do not know what else Chris did!)

Updated, 20 January: Chris told me he just managed to successfully connect to the Pebble watch using the bluetooth WebAPI. It requires two Gecko patches (one regression patch and one obvious logic error that he hasn’t filed yet). PROGRESS!

~~~

So as you can see we didn’t really get super far in just a day, and I even ended up with unusable hardware. BUT! we all learned something, and next time we know what NOT to do (or at least I DO KNOW what NOT to do!).

Back in December I got a desperate email from this person. A woman who said her Instagram had been hacked and since she found my contact info in the app she mailed me and asked for help. I of course replied and said that I have nothing to do with her being hacked but I also have nothing to do with Instagram other than that they use software I’ve written.

Today she writes back. Clearly not convinced I told the truth before, and now she strikes back with more “evidence” of my wrongdoings.

Dear Daniel,

I had emailed you a couple months ago about my “screen dumps” aka screenshots and asked for your help with restoring my Instagram account since it had been hacked, my photos changed, and your name was included in the coding. You claimed to have no involvement whatsoever in developing a third party app for Instagram and could not help me salvage my original Instagram photos, pre-hacked, despite Instagram serving as my Photography portfolio and my career is a Photographer.

Since you weren’t aware that your name was attached to Instagram related hacking code, I thought you might want to know, in case you weren’t already aware, that your name is also included in Spotify terms and conditions. I came across this information using my Spotify which has also been hacked into and would love your help hacking out of Spotify. Also, I have yet to figure out how to unhack the hackers from my Instagram so if you change your mind and want to restore my Instagram to its original form as well as help me secure my account from future privacy breaches, I’d be extremely grateful. As you know, changing my passwords did nothing to resolve the problem. Please keep in mind that Facebook owns Instagram and these are big companies that you likely don’t want to have a trail of evidence that you are a part of an Instagram and Spotify hacking ring. Also, Spotify is a major partner of Spotify so you are likely familiar with the coding for all of these illegally developed third party apps. I’d be grateful for your help fixing this error immediately.

Thank you,

[name redacted]

P.S. Please see attached screen dump for a screen shot of your contact info included in Spotify (or what more likely seems to be a hacked Spotify developed illegally by a third party).

Here’s the Instagram screenshot she sent me in a previous email:

I’ve tried to respond with calm and clear reasonable logic and technical details on why she’s seeing my name there. That clearly failed. What do I try next?

I was recently asked an excellent question when I promoted the LFNW CFP on IRC:

As someone who has never done a talk, but wants to, what kind of knowledge do you need about a subject to give a talk on it?

If you answer “yes” to any of the following questions, you know enough to propose a talk:

I personally try to propose talks I want to hear, because the deadline of a CFP or conference is great motivation to prioritize a cool project over ordinary chores.

Howdy mozillians!

Last week – on Friday, January 15th – we held Aurora 45.0 Testday; and, of course, it was another outstanding event!

Thank you all for the participation!

A big thank you to all our active moderators too!

Results:

I strongly advise every one of you to reach out to us, the moderators, via #qa during the events whenever you encounter any kind of failure. Keep up the great work! \o/

And keep an eye on QMO for upcoming events!

For the last three years I have had the opportunity to send out a reminder to Mozilla staff that Martin Luther King Jr. Day is coming up, and that U.S. employees get the day off. It has turned into my MLK Day eve ritual. I read his letters, listen to speeches, and then I compose a belabored paragraph about Dr. King with some choice quotes.

If you didn’t get a chance to celebrate Dr. King’s legacy and the movements he was a part of, you still have a chance:

As I outlined in an earlier post, libmacro is a new crate designed to be used by procedural macro authors. It provides the basic API for procedural macros to interact with the compiler. I expect higher level functionality to be provided by library crates. In this post I'll go into a bit more detail about the API I think should be exposed here.

This is a lot of stuff. I've probably missed something. If you use syntax extensions today and do something with libsyntax that would not be possible with libmacro, please let me know!

I previously introduced MacroContext as one of the gateways to libmacro. All procedural macros will have access to a &mut MacroContext.

I described the tokens module in the last post, I won't repeat that here.

There are a few more things I thought of. I mentioned a TokenStream which is a sequence of tokens. We should also have TokenSlice which is a borrowed slice of tokens (the slice to TokenStream's Vec). These should implement the standard methods for sequences, in particular they support iteration, so can be mapped, etc.
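
To make the Vec/slice analogy concrete, here is a minimal sketch. The `Token` enum, the field layout, the `as_slice` method, and the `shout` function are all hypothetical stand-ins chosen for illustration; none of this is the actual libmacro design.

```rust
use std::iter::FromIterator;

// Hypothetical stand-ins; the real libmacro types are not specified here.
#[derive(Debug, Clone, PartialEq)]
pub enum Token {
    Ident(String),
    Punct(char),
}

/// Owned sequence of tokens (plays the role of `Vec<T>`).
#[derive(Debug, Clone, PartialEq, Default)]
pub struct TokenStream(Vec<Token>);

/// Borrowed view of tokens (plays the role of `&[T]`).
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct TokenSlice<'a>(&'a [Token]);

impl TokenStream {
    /// Borrow the whole stream as a slice.
    pub fn as_slice(&self) -> TokenSlice<'_> {
        TokenSlice(&self.0)
    }
}

// Lets `.collect()` build a TokenStream from any iterator of tokens.
impl FromIterator<Token> for TokenStream {
    fn from_iter<I: IntoIterator<Item = Token>>(iter: I) -> Self {
        TokenStream(iter.into_iter().collect())
    }
}

// Iterating a slice yields borrowed tokens, just like `&[T]`.
impl<'a> IntoIterator for TokenSlice<'a> {
    type Item = &'a Token;
    type IntoIter = std::slice::Iter<'a, Token>;

    fn into_iter(self) -> Self::IntoIter {
        self.0.iter()
    }
}

/// Example transformation: upper-case every identifier and copy
/// all other tokens unchanged.
pub fn shout(input: TokenSlice<'_>) -> TokenStream {
    input
        .into_iter()
        .map(|token| match token {
            Token::Ident(name) => Token::Ident(name.to_uppercase()),
            other => other.clone(),
        })
        .collect()
}
```

With these two traits in place, a macro author can use the usual iterator adaptors (`map`, `filter`, `collect`) on token sequences without any token-specific API.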

In the earlier blog post, I talked about a token kind called Delimited which contains a delimited sequence of tokens. I would like to rename that to Sequence and add a None variant to the Delimiter enum. The None option is so that we can have blocks of tokens without using delimiters. It will be used for noting unsafety and other properties of tokens. Furthermore, it is useful for macro expansion (replacing the interpolated AST tokens currently present). Although None blocks do not affect scoping, they do affect precedence and parsing.
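
As a rough illustration of why a None delimiter is useful, the sketch below models token trees with a `Sequence` variant. The variant names `Paren`, `Bracket`, `Brace`, the `Leaf` variant, and the `leaf` and `render` helpers are assumptions made for this example only.

```rust
// Hypothetical model of the proposed `Sequence` token kind.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Delimiter {
    Paren,
    Bracket,
    Brace,
    /// No delimiter characters; the grouping is purely structural.
    None,
}

#[derive(Debug, Clone, PartialEq)]
pub enum TokenTree {
    Leaf(String),
    Sequence(Delimiter, Vec<TokenTree>),
}

/// Convenience constructor for a leaf token.
pub fn leaf(text: &str) -> TokenTree {
    TokenTree::Leaf(text.to_string())
}

/// Render a tree back to text. A `None` sequence prints no delimiters,
/// so the grouping survives only in the tree structure.
pub fn render(tree: &TokenTree) -> String {
    match tree {
        TokenTree::Leaf(text) => text.clone(),
        TokenTree::Sequence(delim, children) => {
            let inner = children.iter().map(render).collect::<Vec<_>>().join(" ");
            match delim {
                Delimiter::Paren => format!("({})", inner),
                Delimiter::Bracket => format!("[{}]", inner),
                Delimiter::Brace => format!("{{{}}}", inner),
                Delimiter::None => inner,
            }
        }
    }
}
```

If a macro substitutes `b + c` into `a * $e` as a `Sequence(Delimiter::None, ..)`, the rendered text has no parentheses, but the outer sequence still has exactly three children, so a parser working on the tree would treat `b + c` as a single operand.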

We should provide an API for creating tokens. By default these have no hygiene information and come with a span which has no place in the source code, but shows the source of the token to be the procedural macro itself (see below for how this interacts with expansion of the current macro). I expect a make_ function for each kind of token. We should also have an API for creating tokens in a given scope (which does the same thing but with provided hygiene information). This could be considered an over-rich API, since the hygiene information could be set after construction. However, since hygiene is fiddly and annoying to get right, we should make it as easy as possible to work with.

There should also be a function for creating a token which is just a fresh name. This is useful for creating new identifiers. Although this can be done by interning a string and then creating a token around it, it is used frequently enough to deserve a helper function.
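
One possible shape for these constructors is sketched below. Everything here is hypothetical: the `Span::GeneratedBy` variant, the `scope: Option<u32>` representation of hygiene, the string interner living inside `MacroContext`, and the names `make_comma`, `make_ident`, `make_ident_in`, and `make_name` are assumptions for illustration, not the proposed API.

```rust
use std::collections::HashMap;

// Every type below is a hypothetical stand-in, not the real libmacro API.

/// Handle to an interned string.
#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash)]
pub struct Name(usize);

/// Where a token came from.
#[derive(Debug, Clone, PartialEq)]
pub enum Span {
    /// A byte range in the user's source file.
    Source { lo: usize, hi: usize },
    /// No place in the source; produced by the named procedural macro.
    GeneratedBy(String),
}

#[derive(Debug, Clone, PartialEq)]
pub enum TokenKind {
    Ident(Name),
    Comma,
}

#[derive(Debug, Clone, PartialEq)]
pub struct Token {
    pub kind: TokenKind,
    pub span: Span,
    /// `None` means the token carries no hygiene information.
    pub scope: Option<u32>,
}

pub struct MacroContext {
    macro_name: String,
    names: Vec<String>,
    lookup: HashMap<String, Name>,
}

impl MacroContext {
    pub fn new(macro_name: &str) -> Self {
        MacroContext {
            macro_name: macro_name.to_string(),
            names: Vec::new(),
            lookup: HashMap::new(),
        }
    }

    /// Intern a string, returning the same `Name` for equal strings.
    pub fn intern(&mut self, s: &str) -> Name {
        if let Some(&name) = self.lookup.get(s) {
            return name;
        }
        let name = Name(self.names.len());
        self.names.push(s.to_string());
        self.lookup.insert(s.to_string(), name);
        name
    }

    // Shared constructor: the span always points at the macro itself.
    fn make(&self, kind: TokenKind, scope: Option<u32>) -> Token {
        Token { kind, span: Span::GeneratedBy(self.macro_name.clone()), scope }
    }

    /// One `make_` function per token kind, with no hygiene information.
    pub fn make_comma(&self) -> Token {
        self.make(TokenKind::Comma, None)
    }

    pub fn make_ident(&self, name: Name) -> Token {
        self.make(TokenKind::Ident(name), None)
    }

    /// Scoped variant: same token, but with hygiene information supplied.
    pub fn make_ident_in(&self, name: Name, scope: u32) -> Token {
        self.make(TokenKind::Ident(name), Some(scope))
    }

    /// Helper that interns the string and wraps it in an identifier token.
    pub fn make_name(&mut self, s: &str) -> Token {
        let name = self.intern(s);
        self.make_ident(name)
    }
}
```

The scoped variant duplicates what a setter could do after construction, which is the "over-rich" trade-off mentioned above: one extra function per token kind in exchange for never creating a token with the wrong hygiene by accident.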

Procedural macros should report errors, warnings, etc. via the MacroContext. They should avoid panicking as much as possible since this will crash the compiler (once catch_panic is available, we should use it to catch such panics and exit gracefully; however, they will certainly still mean aborting compilation).

Libmacro will 're-export' DiagnosticBuilder from syntax::errors. I don't actually expect this to be a literal re-export. We will use libmacro's version of Span, for example.

impl MacroContext {
    pub fn struct_error(&self, &str) -> DiagnosticBuilder;
    pub fn error(&self, Option<Span>, &str);
}

pub mod errors {
    pub struct DiagnosticBuilder { ... }
    impl DiagnosticBuilder { ... }
    pub enum ErrorLevel { ... }
}

There should be a macro try_emit!, which reduces a Result<T, ErrStruct> to a T or calls emit() and then calls unreachable!() (if the error is not fatal, then it should be upgraded to a fatal error).
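
A minimal sketch of how such a macro could be written with macro_rules! follows. The fields of `ErrStruct`, the `ErrorLevel` variants, and the `parse_count` function are assumptions for illustration, and `emit()` panics on a fatal error only to stand in for the compiler aborting.

```rust
// Hypothetical error types; the real ErrStruct and ErrorLevel are not
// specified in this post.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum ErrorLevel {
    Warning,
    Error,
    Fatal,
}

#[derive(Debug)]
pub struct ErrStruct {
    pub level: ErrorLevel,
    pub message: String,
}

impl ErrStruct {
    /// Report the diagnostic. A real compiler would abort compilation on a
    /// fatal error; this sketch panics to emulate that.
    pub fn emit(self) {
        eprintln!("{:?}: {}", self.level, self.message);
        if self.level == ErrorLevel::Fatal {
            panic!("aborting due to fatal error");
        }
    }
}

/// Reduce a `Result<T, ErrStruct>` to a `T`. On `Err`, upgrade the error
/// to fatal, emit it, and never return.
macro_rules! try_emit {
    ($result:expr) => {
        match $result {
            Ok(value) => value,
            Err(mut err) => {
                err.level = ErrorLevel::Fatal;
                err.emit();
                unreachable!()
            }
        }
    };
}

/// Example fallible function (hypothetical) for the macro to wrap.
pub fn parse_count(s: &str) -> Result<u32, ErrStruct> {
    s.trim().parse::<u32>().map_err(|e| ErrStruct {
        level: ErrorLevel::Error,
        message: format!("invalid count `{}`: {}", s, e),
    })
}
```

A macro body can then write `let n = try_emit!(parse_count(text));` and continue with a plain `u32`, leaving error reporting entirely to the macro.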

The simplest function here is tokenize which takes a string (&str) and returns a Result<TokenStream, ErrStruct>. The string is treated like source text. The success option is the tokenised version of the string. I expect this function must take a MacroContext argument.

We will offer a quasi-quoting macro. This will return a TokenStream (in contrast to today's quasi-quoting which returns AST nodes), to be precise a Result<TokenStream, ErrStruct>. The string which is quoted may include metavariables ($x), and these are filled in with variables from the environment. The type of the variables should be either a TokenStream, a TokenTree, or a Result<TokenStream, ErrStruct> (in this last case, if the variable is an error, then it is just returned by the macro). For example,

fn foo(cx: &mut MacroContext, tokens: TokenStream) -> TokenStream {
    quote!(cx, fn foo() { $tokens }).unwrap()
}

The quote! macro can also handle multiple tokens when the variable corresponding with the metavariable has type [TokenStream] (or is dereferencable to it). In this case, the same syntax as used in macros-by-example can be used. For example, if x: Vec<TokenStream> then quote!(cx, ($x),*) will produce a TokenStream of a comma-separated list of tokens from the elements of x.

Since the tokenize function is a degenerate case of quasi-quoting, an alternative would be to always use quote! and remove tokenize. I believe there is utility in the simple function, and it must be used internally in any case.

These functions and macros should create tokens with spans and hygiene information set as described above for making new tokens. We might also offer versions which take a scope and use that as the context for tokenising.

There are some common patterns for tokens to follow in macros, in particular those used as arguments for attribute-like macros. We will offer some functions which attempt to parse tokens into these patterns. I expect there will be more of these in time; to start with:

pub mod parsing {
    // Expects `(foo = "bar"),*`
    pub fn parse_keyed_values(&TokenSlice, &mut MacroContext) -> Result<Vec<(InternedString, String)>, ErrStruct>;

    // Expects `"bar"`
    pub fn parse_string(&TokenSlice, &mut MacroContext) -> Result<String, ErrStruct>;
}

To be honest, given the token design in the last post, I think parse_string is unnecessary, but I wanted to give more than one example of this kind of function. If parse_keyed_values is the only one we end up with, then that is fine.
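
To show what parse_keyed_values might do internally, here is a simplified sketch. It departs from the signature above in ways that are purely assumptions for the example: it takes a plain `&[Token]` instead of a `&TokenSlice`, omits the `MacroContext` argument, uses `String` in place of `InternedString`, and uses a made-up four-variant `Token` enum and a one-field `ErrStruct`.

```rust
// Simplified, hypothetical token and error types for this sketch only.
#[derive(Debug, Clone, PartialEq)]
pub enum Token {
    Ident(String),
    Eq,
    Comma,
    Str(String),
}

#[derive(Debug, PartialEq)]
pub struct ErrStruct(pub String);

/// Expects `(foo = "bar"),*`: zero or more `key = "value"` entries
/// separated by commas, with no trailing comma.
pub fn parse_keyed_values(tokens: &[Token]) -> Result<Vec<(String, String)>, ErrStruct> {
    let mut pairs = Vec::new();
    let mut rest = tokens;
    while !rest.is_empty() {
        match rest {
            // One `key = "value"` entry, followed by whatever remains.
            [Token::Ident(key), Token::Eq, Token::Str(value), tail @ ..] => {
                pairs.push((key.clone(), value.clone()));
                rest = match tail {
                    // End of input after an entry is fine.
                    [] => tail,
                    // A comma must be followed by another entry.
                    [Token::Comma, more @ ..] if !more.is_empty() => more,
                    _ => return Err(ErrStruct("expected `,` between entries".to_string())),
                };
            }
            _ => return Err(ErrStruct("expected `key = \"value\"`".to_string())),
        }
    }
    Ok(pairs)
}
```

For an attribute such as `#[my_attr(name = "x", mode = "fast")]`, the macro would pass the tokens inside the parentheses and get back the two key/value pairs, or an error it can hand to the diagnostics API.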

The goal with the pattern matching API is to allow procedural macros to operate on tokens in the same way as macros-by-example. The pattern language is thus the same as that for macros-by-example.

There is a single macro, which I propose calling matches. Its first argument is the name of a MacroContext. Its second argument is the input, which must be a TokenSlice (or dereferencable to one). The third argument is a pattern definition. The macro produces a Result<T, ErrStruct> where T is the type produced by the pattern arms. If the pattern has multiple arms, then each arm must have the same type. An error is produced if none of the arms in the pattern are matched.